ALLOSAUR

Recently I (and others) finished a paper on accumulators. Since the paper is ridiculously long, this post summarizes the main ideas and problems we wanted to solve.

There's been a recent push for digital credentials. The idea is to take the various credentials you use in day-to-day life and replace them with digital versions.

As a few examples of the kind of credentials we're talking about:

- an ID card used to prove your age to buy restricted substances (alcohol, tobacco, marijuana, firearms)

- a license to drive, fish, hunt

- a professional certification to practice medicine, accounting, car repair

- a certification that you had a COVID vaccine

- a parking permit

- an ID used to vote

- register to use an online service

The purported benefits are convenience and, in some cases, better privacy and security for you. Two flagship examples in the space seem to be:

- "Selective disclosure", especially for ID cards. Many countries are afflicted with such egregious car culture that people use their driver's license as a national ID, and use it to, e.g., get into a bar. But a driver's license has all kinds of information you don't want to reveal to a bar bouncer, such as your home address and your full name.

- "Self-sovereign ID" where you manage your own credentials to do things like register for websites, rather than rely on something like your Google account. Someone still needs to give you those credentials, though; the difference is that you keep them on your devices, rather than contacting (e.g.) Google every time.

But generally, shifting from physical to digital credentials creates a different set of privacy risks. If you run a liquor store and want to track everyone who presents their ID to you, you need to have a quick memory, because you would need to write down all your details after they show you their ID (and without them noticing!). But with a digital credential, your computer system which checks the credentials can easily log this interaction without the customer having any idea. And since it's already digitized, it's cheap and easy to aggregate this data.

As described, this is actually solved almost completely, since you can just use a zero-knowledge proof. Recording a zero-knowledge proof should give you no user-specific information.

So, why did we write a 170 page paper about anonymity?

To explain that we have to talk about revoking credentials. For example, if someone has a driving license but drives drunk, their driving license must be revoked. Yet if it's a digital credential, how do we delete it? What if they made back-ups? How does someone like a police officer, checking a zero-knowledge proof of the validity of the driver's license, check that it hasn't been revoked?

For a driver's license, the digital credentials would be issued by some branch of the government. This branch would also release something like a public key, against which the digital credentials will be compared. If someone's driver's license is revoked, this public key must change: if not, then the revoked digital credential will still appear valid, since all inputs to the validation protocol will be the same!

But changing your public key every time you revoke a credential feels like a bad idea, because then everyone who should still have a valid license would need a new credential! We could try other techniques -- like having the verifier keep a list of revoked credentials and having users prove they're not in that list (problem: lots of storage for the verifier), or having an expiry date for each credential (problem: can't revoke in between expiry dates, and still requires re-issuing a lot of credentials) -- but another solution is:

A CRYPTOGRAPHIC ACCUMULATOR

While accumulators originally had different structure and motivation, their use now (and especially in digital credentials) is not much different than a digital signature. There is an "accumulator value" that acts as a public key, and a user gives some "ID" to the accumulator manager, who gets a "witness" in return (all of these are just pieces of data). A user can produce a zero-knowledge-proof that their witness is valid, allowing them to prove that they have the required credential.

The IDs in the accumulator can be constantly added or removed, but when they are removed, the accumulator value itself must change, and hence users must update their witnesses. Again, if this was just a digital signature (and so far, digital signatures do everything an accumulator can do), this would require re-issuing a witness. But for accumulators, there are efficient update methods.

How efficient? Well, not great. Our work started by considering a pairing-based accumulator of Nguyen, and the update protocol of Vitto and Biryukov. Their update is quite nice, but unfortunately an update over 10,000 changes took something like 14 seconds and sends 112 kB of data.

To escape this update time, one option is -- in fact -- to just re-issue signatures. That's the approach of this paper on multi-party revocation in Sovrin, who use MPC to share trust over the secret key. But their update means users must send their ID directly to the servers to get a signature. Is this a problem?

Consider this scenario. It's a magical digital credential future and you park your car downtown, where it instantly starts a handshake over bluetooth with the nearby parking meter to anonymously prove that it has a valid parking permit. Unfortunately, the parking meter says: your credential is out-of-date. It was last updated yesterday, but I have an accumulator value from today. You need to update.

So your car decides to update. Who offers parking permits? Likely, the city or police, so your car connects to the internet and sends its ID to the police. Maybe this is pseudonymous, maybe not; generally, someone will need to connect IDs to real-world attributes to know which IDs to revoke in response to real-world problems. So now the police know that your car, at 13:10 on Thursday October 13th, needs an updated credential. Since your permit is still valid (according to some internal police database), you get your new credential.

Then your car sends this new credential to the parking meter. But now, the parking meter does something sinister: it sends a message to the police saying: I am the parking meter at 4368 Main street. Someone just presented a valid credential at 13:11 on Thursday October 13th.

The server at the police station compares these two events and says: aha, these were probably the same. This means your car was at 4368 Main Street at 13:10 on Thursday October 13th. Now the police know exactly where and when everyone is driving (and in many places, this would also tell them where everyone is) with no warrant or justification.

The conclusion is: updates are also a privacy risk.

Can we do updates privately? Absolutely! The updates of Vitto and Biryukov, mentioned earlier, do exactly that: they send an update polynomial which any user can use, and precisely those users with a valid ID that was not deleted will be able to update, all with the same polynomial (pretty cool, right? check out their paper!).

However, Camacho and Hevia proved some unpleasant lower bounds on this process. If you want your update to be anonymous, it can't be user-specific (i.e., the same data must go to all different users requesting an update). If it's not user-specific, then if there were N deletions, the update must send data whose size is proportional to N. It's a clever information-theoretic argument: if I have credentials for N different users, and you send me the update data, I can attempt to update all N different users. Which users fail and which users succeed will precisely tell me some specific subset of those users. The number of bits you need to accurately transmit a subset of a set of size N is proportional to N.

That's bad news, but we saw this and thought: an anonymous update could be user-specific, if it had some interaction. It's still not going to be great: the same argument as above, adapted to include interaction, shows that an anonymous update must be equivalent to private information retrieval, which is not easy. With ALLOSAUR we took an interactive approach and dropped the communication costs quite a bit while still being anonymous, although we need multi-party computation for this to work.

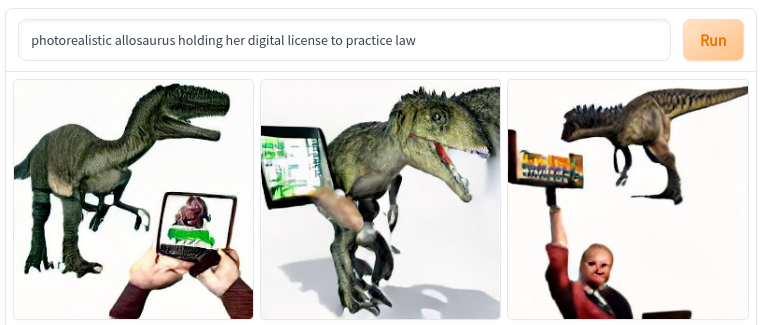

To summarize our results, we construct an accumulator for anonymous credentials that allows revocations, where the updates are low-latency (user-side computation is small), oblivious (the user sends no user-specific information), and has less communication than the linear lower bound. Hence: Accumulator with Low-Latency Oblivious Sublinear Anonymous credential Updates with Revocations.

So why all the pages?

FORMALISM

In the scenario with parking permits above, the police are handling requests from cars all over the city. So are the parking meters. Oh, and someone is using their laptop to send its own bluetooth signal to try to park for free; someone else has installed a fake parking meter to try to steal legitimate credentials. All of this is happening asynchronously, and the internal computational state of all of these players must change with each interaction, by design. In short, simple definitions weren't going to cut it.

In a bit more detail, we imagined a scenario with four types of players: an authority that decides who receives credentials and when they are revoked; some number of accumulator managers who actually host the accumulator and do the computations, users who obtain credentials and prove possession, and verifiers who verify the credentials.

For security, the threats we imagined included:

- accumulator managers trying to de-anonymize users

- accumulator managers trying to add credentials that shouldn't be added

- verifiers and accumulator managers colluding to try to de-anonymize users

- users trying to prove possession of credentials that they shouldn't be able to

- anyone at all trying to stop or stall the system

To capture all of these, we wanted an adversary who corrupted some of the accumulator managers and who managed an arbitrarily large number of corrupt users and verifiers. In practice, deploying this protocol would result in all of these different players running different computations simultaneously, engaged in multiple simultaneous protocols with each other. To formalize these notions was tricky: we need to define what messages are, where they go, how they are delivered, and so on. We assumed a public message board with guaranteed and ordered delivery; a consensus append-only ledger (dare I say: a blockchain?) could do this. This gets us most of the way.

From there, it's a drastic amount of work just to show that the whole thing works as it's supposed to without getting stuck. Basically we showed, from scratch, that the multi-party computation works. This seems a bit like overkill, given that MPC is well-developed already. However, we wanted to carefully track all the messages to ensure a great deal of anonymity; we weren't sure a black-box approach would give us all the control and information we needed about these things.

To summarize the length of the paper, this shows how long each section is. Most of it is just proofs of basic things, or laying out a basic formalism. MPC is complicated!

| Introduction, summary of main ideas, performance numbers |

|---|

| References |

| MPC Formalism |

| Security definitions |

| MPC functions and pseudocode |

| Full protocol and pseudoocode |

| Proofs that the MPC works correctly, doesn't stall, and identifies bad actors |

| Proof of soundness |

| Proof of anonymity |

ANONYMITY

Then we have to consider anonymity. It gets tricky.

First, how are users interacting with the system? If they're connecting with regular internet protocols, anonymous updates are immediately impossible. We have to assume they use something like TOR, and we model this formally by letting users use "pseudonyms" as addresses for each message. TOR might not be realistic (will a government produce an app that auto-connects to TOR for basic functionality? We can dream...), and I'll discuss later about a possible fix.

Next, what we actually want is that no one can correlate different interactions with the accumulator managers and verifiers. There are cases where you will deliberately de-anonymize yourself when using your credentials, such as if you used them as an ID to vote. (I don't condone this application, but someone might try it, and if they do, they will need your real identity to cross you off the list of voters). Once that happens, we want to ensure that this interaction cannot be related to any other interaction, or else you will only ever be as anonymous as your least anonymous use of your credentials. That's not good!

We might be tempted to define anonymity as this: an adversary should not be able to guess whether two different messages come from the same user or not. Except that this is practically impossible, for many reasons. A big reason is that users will constantly de-anonymize themselves on purpose.

Let's consider a few examples of this deliberate anonymity loss:

- If a user proves their credentials to an adversarial verifier, the verifier learns whether that user has a valid credential. Of course, the user wants the verifier to learn precisely this!

- If a user's credentials are out-of-date, and they need to update them before proving them to a verifier, then the update request and verification are highly correlatable by timing analysis (one of our main motivations)

- When a user requests an update, they need to specify how far back their update goes. As in, if their last update was last Friday, they need to say, "Please update me from last Friday to today". This seems sort of fundamental in accumulators. Without this information, the server would not know how much update information to include, and the update information from the initiation of the accumulator to the present might be huge.

But, this degrades anonymity: If you were the only person who updated on Friday, then your next update can be connected to that last update.

- Finally, why are users doing certain things? Suppose that two different stores are on the same street. Basic demographics suggests that users living in that area are more likely to go to those two stores than others; thus, interactions with the two different stores are correlated. To formally model this notion, we allow the adversary to control when users perform each action: the adversary tells them when to update, when to verify and to whom, etc.

This puts us in a tricky spot. We want to prove anonymity, but we know users will lose it. We thought the best thing to do is show that there are no "unexpected" anonymity losses. That is, you can expect that if you use your credentials at the same store at the same time every time, someone might guess that it's the same person. And similarly for the other issues. But no one should learn more than that.

How do you define what anonymity is "expected"? We decided that any protocol in our formalism must define an anonymity function that considers the state of the accumulator, the messages sent, and the adversary's requests, then produces an anonymity set for each message (technically, for each pseudonym). Our anonymity definition: an adversary should not be able to guess that two messages came from the same user with probability higher than just guessing among their anonymity sets.

We called this "indistinguishability". This is a strange definition and I haven't seen anything like it before, but I liked how it worked in the end.

Let's consider a trivial case, where the anonymity set of each pseudonym is precisely the user who the pseudonym belongs to. This is "indistinguishable", but only trivially: the probability of correlating two pseudonyms based on their anonymity sets is either 1 or 0, depending on whether the same user really did send those two messages. The adversary obviously cannot guess with probability any better than this! Hence, it is technically indistinguishable, but it's completely non-anonymous. So our definition doesn't quite capture anonymity.

Thus, if you construct a protocol and prove it indistinguishable, you have an open question: how anonymous is it? Luckily, because you were forced to define an anonymity function, you have a "lower bound" on anonymity based on the outputs of that anonymity function. You can then look for patterns of interactions that will ensure that the anonymity set for every message is sufficiently large.

In fact, indistinguishability also shows that an adversary needs to find these patterns as well. If an adversary only does things that completely de-anonymises users (example: there are only two users, and only one of them has a valid credential), then the adversary can never hope to win the indistinguishability game, since the anonymity sets will always be trivial! Thus, to break indistinguishability, the adversary has to do something so that the anonymity sets get large, but the adversary has more information beyond that.

If the protocol is provably indistinguishable, then we learn that whenever the anonymity sets are large, users can genuinely expect anonymity.

For ALLOSAUR, we showed that a particular pattern of user behaviour will create larger anonymity sets. What needs to happen is:

- users all request updates at the same time

- there are always enough users with valid credentials, and also always enough with invalid credentials

- users are not considered anonymous until their first update after their credential was last issued or revoked.

Since we prove that ALLOSAUR is indistinguishable, this shows that it actually provides anonymity as well, as long as users behave like this.

The other interesting aspect of our definition is that anonymity "heals". Since we define anonymity sets for pseudonyms, and users can obtain new pseudonyms at any time, then even if one pseudonym gets de-anonymized, a user can request obtain a new one and be re-anonymised. To make this concrete, if one TOR connection is part of a user's verification to vote then that connection is de-anonymized; however, their next TOR connection will be new and uncorrelated to the last interaction.

Why Nots?

Why not use a list of revoked credentials? The internet's certificate and public key infrastructure uses a list of revoked credentials. We avoided that because we imagine scenarios where this puts an unacceptable burden on the verifier, who must maintain this list. Our intended application of digital credentials likely has higher turnover of credentials, so the revocation list would be too long. On top of that, we would need a zero-knowledge proof that a user's credentials are not in the list of revoked credentials.

Why not use expiry dates? Another method that certificate authorities use is to just add an expiry date. This would work for digital credentials, but it creates two problems. If the expiry date is far in the future, then a renegade user with revoked credentials will have a larger window of time when they appear to be legitimate, but they should not be. Instead, if the expiry dates are always soon, then there is a large turnover for honest users. Either way (and especially with high turnover!) users need an anonymous method to get new credentials once their old ones expire.

Why not Merkle trees? A Merkle tree can be seen as a type of accumulator, but we didn't see good methods to efficiently revoke credentials and efficiently and anonymously update. Moreover, the size of credentials ends up logarithmic in the total number of credentials in the system, whereas in ALLOSAUR (and all pairing-based or RSA-based accumulators) they are constant-sized.

Why not use existing MPC work? The idea of using MPC for accumulators, and Nguyen's accumulator specifically, is not new. In a UC framework, it's much easier to compose the MPC building blocks with the necessary computation for the accumulator. For our purposes, we wanted fine-grained traffic analysis for the anonymity definitions and proofs, which this modular perspective does not provide. Further, the accumulator itself lets us skip a lot of extra MPC functionality necessary for integrity. Whether this provides a big practical benefit remains to be seen, since we didn't implement the core MPC functionality (yet!).

Why didn't you implement the core MPC functionality? Because we started from a Rust implementation of the accumulator, and oblivious transfer libraries in Rust are not as mature. We would like to implement this, though!

RESULTS

ALLOSAUR is anonymous. We end up with the same anonymity guarantees as an accumulator with public update data, although now with a more precise security definition. Critically, this depends on having some honest accumulator managers; anonymity can be achieved with just one honest accumulator manager (though there is an anonymity/availability trade-off).

ALLOSAUR is fast. With 5 accumulator managers, our implementation takes only 52 ms of user-side computation, 371 ms of server-side computation, and 16 kB of total communication, to update over 1000 changes. For comparison, with the Vitto and Biryukov methods this takes 369 ms of user-side computation, 12 s of server-side computation, and 80 kB of communication.

We don't do non-membership. A feature of many accumulators is the capacity to provide non-membership proofs. These are insecure for the Nguyen accumulator, actually, so we don't include them. Of course, one can simply use two accumulators: one representing users that have valid credentials, and another representing invalid credentials. Proving membership in the second proves non-membership in the first, and vice-versa.

We might be able to dodge TOR. Our formalism allows all verifiers to be corrupt and collude with accumulator managers. Hence, there should be no security loss for a user to route all of its update messages to each accumulator manager (appropriately encrypted!) through a verifier. Since the verifier need not be anonymous (at least in the applications we consider), they can send these over a regular internet connection. Hence, as long as the user has an anonymous connection to the verifier, they are anonymous! This is where I am out of my depth: how anonymous can a Bluetooth connection be? If Bluetooth is easier to anonymize than TOR (naively, it should be?) then a user could route all of its data through the verifier. This is a bit beyond our paper, but something I'm interested in and that I think might work.

Physical credentials are also anonymous. In all of this work, we shouldn't lose sight of the benefits of our existing non-digital infrastructure, which provide basically all the same anonymity guarantees as ALLOSAUR. While digital credentials offer some benefits, I worry about "solutionism": starting with a solution and finding a problem to match it. We didn't start this project because we dreamed of the convenience of a digital identity, we started it because we worried about privacy in systems that others were already deploying.

TECHNICAL

Our main contributions are formalism and definitions; our technical contribution to the protocol is straightforward.

Vitto and Biryukov defined batch update polynomials d(x) and V(x), which can be computed using just the list of revoked credentials and the previous values of the accumulator. A user can update their credential if they can obtain d(y) and V(y), where y is their pseudonymous credential in the accumulator. Thus, the previous method was to just send the coefficients of d(x) and the coefficients of V(x), and the user evaluates both and updates locally.

The polynomial d(x) is actually the unique monic polynomial with all revoked credentials as roots. If there are m revoked credentials, then d has length m+1, and it takes O(m2) time to compute the coefficients.

What we first noticed is that once a user updates once, they can update again without sending any new data to the server (they just get a second pair of coefficients for new polynomials d'(x) and V'(x), based on the next list of revocations). Thus, it makes more sense to split the update polynomials into a sequence of polynomials of some bounded degree. Communication costs are still proportional to the number of revocations (we saw about a 1% communication overhead from doing this), but the server's computational cost to make these polynomials is now O(m) (we saw the computation drop from 11.1 seconds to 0.4 seconds).

Second, if each of these update polynomials have degree at most k, then the user could send y, y2,y3, ... , yk to the server, who could evaluate each polynomial with these same elements. Thus with O(k) communication from the user, the server only needs to send back O(m/k) bits. Obviously this is non-anonymous, but what if the user used an affine secret-sharing scheme?

More specifically, the user can simply use t-out-of-n Shamir secret sharing and send shares of y, y2,y3, ... , yk to each server. The server can compute any affine function of these shares and return the result to the user, who can reconstruct the result. Specifically, the server can compute d(y) and V(y) as long as d and V have degree at most k, since that's an affine function: just multiply each power of y by the corresponding coefficient of d or V.

Once the user gets t shares back, they reconstruct each update polynomial. This means the communication to and from each server is O(k) and O(m/k), respectively, which gives a square root reduction in communication.

Even better, the user's computation is reduced, since they do not need to evaluate a polynomial of degree m, but only assemble O(m/k) results together. Especially since the coefficients of V(x) are actually elliptic curve points, this saves the user a lot of computation (with 10,000 revocations, they drop from about 4 seconds to 0.17 seconds).

Incidentally, the servers are just evaluating a polynomial which could be published publicly with no loss of security. Thus, the user can act as a trusted third party, since any dishonest user behaviour reveals nothing sensitive. This means this MPC here requires no overhead: the servers can receive the Shamir shares directly.

Here the user has a trade-off of availability against anonymity. If the user uses t-out-of-n shares, then if t dishonest servers colluded, they could reconstruct y and de-anonymize the user. Hence, the user wants t to be quite large for anonymity. However, with t-out-of-n shares, only t servers need to respond with the result of the polynomial evaluation, and that's enough for the user (and if t+1 servers respond, the user has a guaranteed integrity check). Thus, the user wants t to be small for availability.

Technically, the servers don't need to know what value of t the user chose. So (though we didn't formally address this) a user should be able to choose whatever value of t fits their individual anonymity and availability needs.